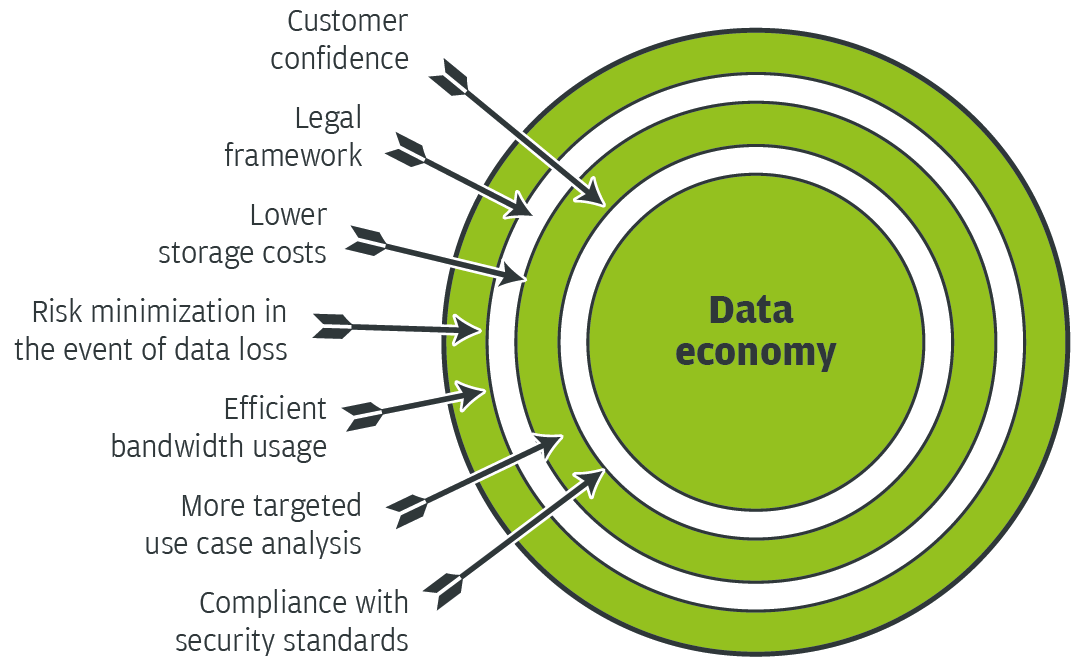

Data economy should be paramount in the development of products and solutions for the automotive market. The economic use of customer and vehicle data ultimately benefits the OEMs themselves.

Market research company Gartner predicts that by 2020, about 250 million connected cars will be on the road worldwide. However, customer surveys show that, despite all the benefits this connectivity brings, users are very concerned about its impact on their privacy. Nowadays, data is considered a currency of its own. The possibilities for monetizing customer data are virtually endless. They range from predicting available parking spaces and enriching map data to inventing new driver assistance functions.

Connecting vehicle sensors to cloud-based analysis methods and services results in many potential applications aimed at different target groups: the OEM itself (for example, for the further development of its products), suppliers at all levels, authorized dealers and workshops and, last but not least, the end customer, i.e., the driver or registered owner.

However, given the fierce competition in today’s industry, customer confidence is a key factor for automobile manufacturers.

This creates a dilemma for companies and service providers: On the one hand, the use of data promises new business and revenue models. On the other hand, disappointing customers or, even worse, a privacy scandal would be disastrous for the company’s image and have serious legal consequences.

These issues are made even more complex as new big data analysis capabilities can easily associate supposedly anonymized data with specific individuals.

Automobile manufacturers and data service providers need to keep this in mind when handling customer identities, floating car data and communications metadata.

A strategy of self-restraint is recommended.

Anonymization is more complex than it seems

Some people think that movement profiles, geolocation or floating car data can be sufficiently pseudonymized by removing personal data such as names or customer numbers. Given the analysis methods available today, this approach is doomed to failure.

Based on regular drives between home and workplace, it is not difficult to subsequently associate floating car data with a specific individual.
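How little is needed for such a re-identification can be illustrated with a minimal sketch. It assumes pseudonymized trip records that contain only a start hour and start and end coordinates; the record layout, the function name `infer_home_and_work` and the morning time window are assumptions made for illustration, not a description of any real analysis tool.

```python
from collections import Counter

def infer_home_and_work(trips, precision=3):
    """Guess home and workplace cells from trips that carry no name or ID.

    Each trip is a tuple (start_hour, start_lat, start_lon, end_lat, end_lon).
    Coordinates are rounded to a coarse grid cell (3 decimals is roughly 100 m).
    """
    def cell(lat, lon):
        return (round(lat, precision), round(lon, precision))

    morning_starts = Counter()
    morning_ends = Counter()
    for hour, slat, slon, elat, elon in trips:
        # Assumption: trips starting between 05:00 and 10:00 are commutes.
        if 5 <= hour < 10:
            morning_starts[cell(slat, slon)] += 1
            morning_ends[cell(elat, elon)] += 1

    if not morning_starts:
        return None, None
    # The most frequent morning origin is probably home,
    # the most frequent morning destination probably the workplace.
    home = morning_starts.most_common(1)[0][0]
    work = morning_ends.most_common(1)[0][0]
    return home, work

# Hypothetical sample data: three commutes, one evening return, one detour.
trips = [
    (7, 48.1371, 11.5754, 48.1771, 11.5562),
    (8, 48.1372, 11.5753, 48.1770, 11.5561),
    (7, 48.1371, 11.5752, 48.1772, 11.5560),
    (18, 48.1771, 11.5562, 48.1371, 11.5754),
    (9, 48.1500, 11.6000, 48.1771, 11.5562),
]
home, work = infer_home_and_work(trips)
```

A home cell combined with a workplace cell is usually enough to narrow the data down to very few people, even though no name or customer number was ever stored.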

Through “fingerprints”, even isolated diagnostic data can be linked to a single vehicle and therefore its driver. The possible examples are countless: separating useful data that is of high value to certain applications or analyses from the person who “owns” or “caused” it is a far greater challenge than one might at first think.

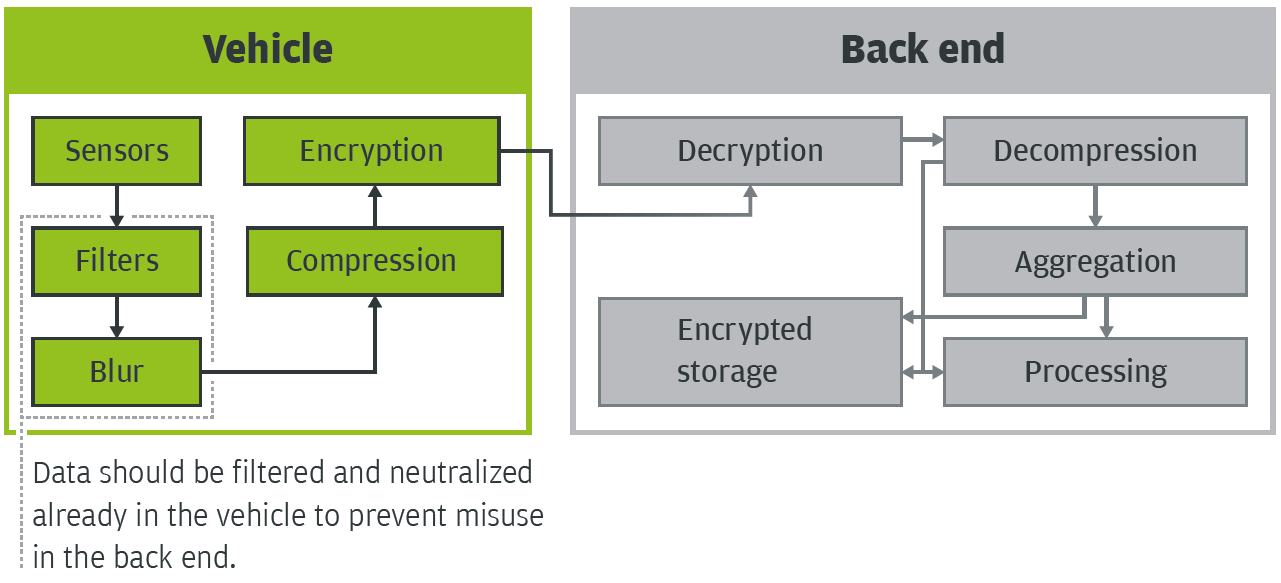

If privacy and the protection of the individual customer’s personal data are to be ensured, security and data economy must be considered from the outset when designing an application. For example, a common strategy is to separate authentication and services. A service provided online then knows only that it received an authorized request; it does not know who is behind it.
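The separation of authentication and service can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a production design: the function names `issue_token` and `is_authorized`, the token layout and the shared key are all hypothetical, and the scheme only works as intended if the authentication service does not log which token it handed to which customer.

```python
import hashlib
import hmac
import secrets
import time

# Hypothetical secret known to the authentication service and the online service.
SHARED_KEY = secrets.token_bytes(32)

def issue_token(customer_id, password_ok, ttl_seconds=300, now=None):
    """Authentication service: verifies the customer, then issues a token.

    The customer_id is deliberately NOT written into the token.
    """
    if not password_ok:
        raise PermissionError("authentication failed")
    now = time.time() if now is None else now
    expires = str(int(now) + ttl_seconds)
    nonce = secrets.token_hex(16)  # random, carries no identity
    payload = f"{expires}.{nonce}"
    sig = hmac.new(SHARED_KEY, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{sig}"

def is_authorized(token, now=None):
    """Online service: learns only whether the request is authorized."""
    try:
        expires, nonce, sig = token.split(".")
        expiry = int(expires)
    except ValueError:
        return False
    expected = hmac.new(
        SHARED_KEY, f"{expires}.{nonce}".encode(), hashlib.sha256
    ).hexdigest()
    now = time.time() if now is None else now
    # Constant-time comparison of the signature, then the expiry check.
    return hmac.compare_digest(sig.encode(), expected.encode()) and now < expiry
```

The online service can check the signature and the expiry time, but the token itself contains nothing that points back to a customer.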

Nevertheless, metadata such as the IP address can reveal the user – which means it must be removed. Anonymization can be further improved by recording and storing only specific segments of a route and not complete routes. For time stamps, there is an approach to completely remove the day’s date and store only hours and minutes.

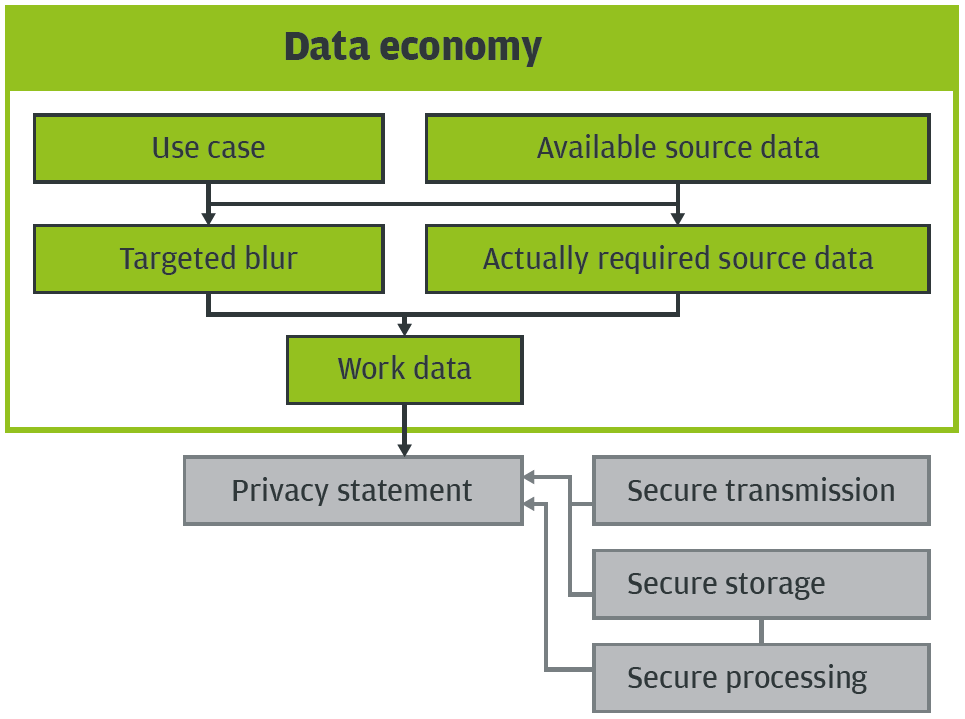

In this case, the use of the data in applications – for example, for utilization profiles for route segments – must be designed, right from the start, such that it gets by with this neutralized data.
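The neutralization steps described above (dropping the IP address, keeping only a route segment instead of the full route, and reducing the time stamp to hours and minutes) might look like this in a simplified form. The record fields and the `segment_of` lookup are hypothetical; a real system would use its own map-matching and data model.

```python
from datetime import datetime

def neutralize(record, segment_of):
    """Return a reduced copy of a floating car data record.

    record     -- dict with 'timestamp' (ISO 8601), 'lat', 'lon', 'speed_kmh'
                  and possibly metadata such as 'ip' or 'vin'
    segment_of -- hypothetical function mapping (lat, lon) to a route segment ID
    """
    ts = datetime.fromisoformat(record["timestamp"])
    return {
        # Only the segment is kept, not the exact position or full route.
        "segment_id": segment_of(record["lat"], record["lon"]),
        # The date is dropped; only hours and minutes remain.
        "time_of_day": ts.strftime("%H:%M"),
        "speed_kmh": record["speed_kmh"],
        # Everything else (IP address, VIN, ...) is simply not copied.
    }

raw = {
    "timestamp": "2016-03-14T07:42:10",
    "lat": 48.1771,
    "lon": 11.5562,
    "speed_kmh": 63,
    "ip": "203.0.113.7",
    "vin": "HYPOTHETICALVIN01",
}
reduced = neutralize(raw, lambda lat, lon: "segment-17")
```

A utilization profile for a route segment can then be built from `segment_id`, `time_of_day` and `speed_kmh` alone, which is exactly the kind of application design the text calls for.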

However, engineers and developers should be aware that such actions only improve the security level. “There is no such thing as absolute security”, a motto used by privacy specialists, may sound like a cliché, but it is basically true.

With enough effort and resources, anyone can associate even chopped-up, anonymized or pseudonymized data, or data with “neutralized” time stamps, with particular individuals.

Broad range of risks

One might now ask how great the risk is that privacy measures are subsequently undone. To answer this question, the experts responsible should keep an eye out for potential threats. This starts with business and cooperation partners that may be located in another jurisdiction or have implemented less comprehensive privacy safeguards in their companies.

For some U.S. companies, for example, in-depth analysis of personal data is at the core of their business model. In addition, their home country’s privacy regulations are much weaker than, for instance, Europe’s.

When data that, for example, a German manufacturer collects from its customers is used under these circumstances, European legal professionals could consider this misuse. This would not only have a severe impact on the company’s reputation, but could also result in serious legal consequences.

For example, the European General Data Protection Regulation sets fines of up to 20 million euros or up to 4% of the total worldwide annual turnover of the preceding financial year, whichever is higher. No less alarming is the prospect that personal data could fall into the wrong hands, for example, due to hacker attacks. In recent years, a number of well-known companies have become victims of major data theft.

Considering that a company’s own employees or persons close to the firm are in many cases responsible for attacks and data theft, these risks are even more difficult to assess.

In a study published in 2015, “Spionage, Sabotage und Datendiebstahl – Wirtschaftsschutz im digitalen Zeitalter [Espionage, sabotage and data theft – economic security in the digital age]”, the German industry association Bitkom presents the following findings: In cases from 2013 and 2014, with a total of 550 companies affected, 52% of the suspected perpetrators are current or former employees and 39% are close to the company.

Compared to this, the percentage of organized crime, hobby hackers and foreign intelligence agencies is significantly lower.

Aside from direct financial losses such as claims for damages and fines, the loss of reputation caused when such cases are brought to light can be much more damaging in the long run.

Beware of granting rights “preemptively”

However, these effects, too, are only one aspect of the problem. Another aspect that involves both legal and strategic pitfalls is the lawful use of customer data protected by information technology. The German Federal Data Protection Act (BDSG) and the corresponding European directives require that customers be provided with detailed information about which data is collected for which purpose.

This information is usually provided in user agreements that must be agreed upon or, for example as part of a purchase or lease agreement, signed by the customer. For their own protection, providers should clearly state on which technological basis they offer their specific services. However, there is a conflict of aims regarding future business models or extensions of existing services.

Each service that is provided online involves the risk that the data it collects is used for purposes other than those agreed upon by the user. This also includes the transfer of data to third parties without explicit consent. There are various reasons for this, ranging from ignorance on the service provider’s part, sloppiness and cuts in security, to business models that are contrary to customers’ interests.

In case future applications or business models require additional data, companies that operate diligently could consider integrating extensive rights into their user agreements from the outset. But this approach, too, carries high risks. On the one hand, there are legal risks, as privacy case law, particularly in Germany, is often rather strict.

On the other hand, there are risks to the company’s image – a good example is the scandal involving a renowned Korean TV maker. The terms of use of the company’s smart TVs granted the manufacturer the right to record any word spoken in front of the device and analyze it in its data centers.

It is easy to imagine the impact that a similar idea would have in conjunction with cloud-based voice recognition in the car.

Focus on legal certainty and customer protection

It is therefore necessary to constantly weigh up privacy requirements and data-based business models. The only really safe way of preventing data from falling into the wrong hands is to make sure that it is not generated in the first place.

What recommendations for action result from this? As already mentioned above, anonymizing or neutralizing data such that it provides an increased level of protection requires considerable technological effort.

Companies should see this effort as an investment in their reputation and future customer relationships. When designing services and applications, privacy should play a key role from the very beginning.

Applications and solutions should be designed such that they use only as much data as is necessary for the specific product. Collecting and storing data “to have it in stock” or “on spec” is highly problematic and should be avoided at all costs.
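One way to enforce this principle in software is an explicit allowlist per purpose, so that fields nobody has justified are never stored. The sketch below is only an illustration; the purposes, field names and the `minimize` helper are hypothetical.

```python
# Hypothetical allowlist: for each purpose, the only fields that may be kept.
ALLOWED_FIELDS = {
    "parking_prediction": {"segment_id", "time_of_day", "parked"},
    "remote_diagnostics": {"error_code", "mileage_km"},
}

def minimize(purpose, raw):
    """Keep only the fields that the given purpose actually needs."""
    if purpose not in ALLOWED_FIELDS:
        # No defined purpose means no collection "on spec".
        raise ValueError(f"no data allowlist defined for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in raw.items() if key in allowed}

sample = {
    "segment_id": "segment-17",
    "time_of_day": "07:42",
    "parked": True,
    "vin": "HYPOTHETICALVIN01",
    "driver_name": "Jane Doe",
}
stored = minimize("parking_prediction", sample)
```

Rejecting unknown purposes outright, rather than storing everything by default, mirrors the recommendation not to collect data “to have it in stock”.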

Companies should use detailed internal guidelines to specify all aspects of handling and using customer data. This also includes appointing a privacy officer and providing this employee with sufficient authority. Customers will reward such efforts with continued brand loyalty.